#computer vision in artificial intelligence


Enhancing AI Services with Computer Vision: Transforming Perception into Intelligence

Computer vision lets AI systems extract, interpret, and respond to information in images, videos, and other visual inputs. Built on machine learning, it acts as the digital equivalent of human vision, and its integration with AI services is changing how industries interpret the visual world.

#computer vision in artificial intelligence#legacy systems#legacy migration#travel management company#online travel agency#Customer Data Platform#Conversational AI#AI chatbot


Bayesian Active Exploration: A New Frontier in Artificial Intelligence

Artificial intelligence has advanced rapidly in recent years, with new techniques and paradigms emerging to tackle complex problems in machine learning, computer vision, and natural language processing. Two concepts that have attracted a lot of attention are active inference and Bayesian mechanics. Although the two have largely been researched separately, combining them has the potential to produce more efficient and accurate AI systems.

Traditional machine learning algorithms rely on a passive approach, where the system receives data and updates its parameters without influencing the data collection process. This approach has limitations, especially in complex and dynamic environments. Active inference, on the other hand, allows AI systems to take an active role in selecting the most informative data points or actions, so that they collect more relevant information. In this way, active inference allows systems to adapt to changing environments, reducing the need for labeled data and improving the efficiency of learning and decision-making.

The early milestones for this kind of active data selection come from active learning. One was "uncertainty sampling", introduced by Lewis and Gale in 1994, which selects the data points the model is least certain about. Another was the "query by committee" algorithm, analyzed by Freund et al. in 1997 and building on a 1992 proposal by Seung, Opper, and Sompolinsky, which uses a committee of models to pick the most informative data points to label. Both laid the foundation for later active learning techniques.
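As a rough illustration of uncertainty sampling, the sketch below picks the unlabeled example whose predicted class distribution has the highest entropy. The probabilities are made-up model outputs and the helper names (`entropy`, `most_uncertain`) are hypothetical; entropy is only one of several possible uncertainty measures.

```python
import math

def entropy(probs):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def most_uncertain(pool_probs):
    """Index of the unlabeled example with the highest predictive entropy."""
    return max(range(len(pool_probs)), key=lambda i: entropy(pool_probs[i]))

# Hypothetical class probabilities predicted for three unlabeled examples.
pool_probs = [
    [0.95, 0.05],  # model is confident
    [0.55, 0.45],  # model is nearly undecided
    [0.80, 0.20],  # model is fairly confident
]

query_index = most_uncertain(pool_probs)
print(query_index)  # → 1, the nearly undecided example
```

The selected example would then be sent to a human for labeling, and the model retrained before the next query.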

Bayesian mechanics, on the other hand, provides a probabilistic framework for reasoning and decision-making under uncertainty. By modeling complex systems using probability distributions, Bayesian mechanics enables AI systems to quantify uncertainty and ambiguity, thereby making more informed decisions when faced with incomplete or noisy data. Bayesian inference, the process of updating the prior distribution using new data, is a powerful tool for learning and decision-making.
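A minimal sketch of Bayesian updating, assuming the simplest possible case: a Beta prior over an unknown success probability, updated with 0/1 observations. The function names and the data are illustrative only.

```python
def update_beta(alpha, beta, observations):
    """Conjugate update of a Beta(alpha, beta) prior given 0/1 observations."""
    successes = sum(observations)
    failures = len(observations) - successes
    return alpha + successes, beta + failures

def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)

prior = (1, 1)        # uniform prior: no initial preference
data = [1, 1, 0, 1]   # hypothetical observations: three successes, one failure

posterior = update_beta(*prior, data)
print(posterior)              # → (4, 2)
print(beta_mean(*posterior))  # posterior mean of the success probability, 4/6
```

The posterior after one batch of data becomes the prior for the next batch, which is what makes the update incremental.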

One of the first milestones in Bayesian mechanics was the development of Bayes' theorem by Thomas Bayes in 1763. This theorem provided a mathematical framework for updating the probability of a hypothesis based on new evidence. Another important milestone was the introduction of Bayesian networks by Pearl in 1988, which provided a structured approach to modeling complex systems using probability distributions.

While active inference and Bayesian mechanics each have their strengths, combining them has the potential to create a new generation of AI systems that can actively collect informative data and update their probabilistic models to make more informed decisions. The combination of active inference and Bayesian mechanics has numerous applications in AI, including robotics, computer vision, and natural language processing. In robotics, for example, active inference can be used to actively explore the environment, collect more informative data, and improve navigation and decision-making. In computer vision, active inference can be used to actively select the most informative images or viewpoints, improving object recognition or scene understanding.

Timeline:

1763: Bayes' theorem

1988: Bayesian networks

1994: Uncertainty Sampling

1997: Query by Committee algorithm

2017: Deep Bayesian Active Learning

2019: Bayesian Active Exploration

2020: Active Bayesian Inference for Deep Learning

2020: Bayesian Active Learning for Computer Vision

The synergy of active inference and Bayesian mechanics is expected to play a crucial role in shaping the next generation of AI systems. Some possible future developments in this area include:

- Combining active inference and Bayesian mechanics with other AI techniques, such as reinforcement learning and transfer learning, to create more powerful and flexible AI systems.

- Applying the synergy of active inference and Bayesian mechanics to new areas, such as healthcare, finance, and education, to improve decision-making and outcomes.

- Developing new algorithms and techniques that integrate active inference and Bayesian mechanics, such as Bayesian active learning for deep learning and Bayesian active exploration for robotics.

Dr. Sanjeev Namjoshi: The Hidden Math Behind All Living Systems - On Active Inference, the Free Energy Principle, and Bayesian Mechanics (Machine Learning Street Talk, October 2024)


Saturday, October 26, 2024

#artificial intelligence#active learning#bayesian mechanics#machine learning#deep learning#robotics#computer vision#natural language processing#uncertainty quantification#decision making#probabilistic modeling#bayesian inference#active interference#ai research#intelligent systems#interview#ai assisted writing#machine art#Youtube


Tom and Robotic Mouse | @futuretiative

Tom's job security takes a hit with the arrival of a new, robotic mouse catcher.

#TomAndJerry #AIJobLoss #CartoonHumor #ClassicAnimation #RobotMouse #ArtificialIntelligence #CatAndMouse #TechTakesOver #FunnyCartoons #TomTheCat

Keywords: Tom and Jerry, cartoon, animation, cat, mouse, robot, artificial intelligence, job loss, humor, classic, Machine Learning, Deep Learning, Natural Language Processing (NLP), Generative AI, AI Chatbots, AI Ethics, Computer Vision, Robotics, AI Applications, Neural Networks

Tom was the first guy who lost his job because of AI

(and what you can do instead)

⤵

"AI took my job" isn't a story anymore.

It's reality.

But here's the plot twist:

While Tom was complaining,

others were adapting.

The math is simple:

➝ AI isn't slowing down

➝ Skills gap is widening

➝ Opportunities are multiplying

Here's the truth:

The future doesn't care about your comfort zone.

It rewards those who embrace change and innovate.

Stop viewing AI as your replacement.

Start seeing it as your rocket fuel.

Because in 2025:

➝ Learners will lead

➝ Adapters will advance

➝ Complainers will vanish

The choice?

It's always been yours.

It goes even further - now AI has been trained to create consistent.

//

Repost this ⇄

//

Follow me for daily posts on emerging tech and growth

#ai#artificialintelligence#innovation#tech#technology#aitools#machinelearning#automation#techreview#education#meme#Tom and Jerry#cartoon#animation#cat#mouse#robot#artificial intelligence#job loss#humor#classic#Machine Learning#Deep Learning#Natural Language Processing (NLP)#Generative AI#AI Chatbots#AI Ethics#Computer Vision#Robotics#AI Applications


AI model speeds up high-resolution computer vision

The system could improve image quality in video streaming or help autonomous vehicles identify road hazards in real-time.

Adam Zewe | MIT News

Researchers from MIT, the MIT-IBM Watson AI Lab, and elsewhere have developed a more efficient computer vision model that vastly reduces the computational complexity of this task. Their model can perform semantic segmentation accurately in real-time on a device with limited hardware resources, such as the on-board computers that enable an autonomous vehicle to make split-second decisions.


Recent state-of-the-art semantic segmentation models directly learn the interaction between each pair of pixels in an image, so their calculations grow quadratically as image resolution increases. Because of this, while these models are accurate, they are too slow to process high-resolution images in real time on an edge device like a sensor or mobile phone.

The MIT researchers designed a new building block for semantic segmentation models that achieves the same abilities as these state-of-the-art models, but with only linear computational complexity and hardware-efficient operations.

The result is a new model series for high-resolution computer vision that performs up to nine times faster than prior models when deployed on a mobile device. Importantly, this new model series exhibited the same or better accuracy than these alternatives.

Not only could this technique be used to help autonomous vehicles make decisions in real-time, it could also improve the efficiency of other high-resolution computer vision tasks, such as medical image segmentation.

“While researchers have been using traditional vision transformers for quite a long time, and they give amazing results, we want people to also pay attention to the efficiency aspect of these models. Our work shows that it is possible to drastically reduce the computation so this real-time image segmentation can happen locally on a device,” says Song Han, an associate professor in the Department of Electrical Engineering and Computer Science (EECS), a member of the MIT-IBM Watson AI Lab, and senior author of the paper describing the new model.

He is joined on the paper by lead author Han Cai, an EECS graduate student; Junyan Li, an undergraduate at Zhejiang University; Muyan Hu, an undergraduate student at Tsinghua University; and Chuang Gan, a principal research staff member at the MIT-IBM Watson AI Lab. The research will be presented at the International Conference on Computer Vision.

A simplified solution

Categorizing every pixel in a high-resolution image that may have millions of pixels is a difficult task for a machine-learning model. A powerful new type of model, known as a vision transformer, has recently been used effectively.

Transformers were originally developed for natural language processing. In that context, they encode each word in a sentence as a token and then generate an attention map, which captures each token’s relationships with all other tokens. This attention map helps the model understand context when it makes predictions.

Using the same concept, a vision transformer chops an image into patches of pixels and encodes each small patch into a token before generating an attention map. In generating this attention map, the model uses a similarity function that directly learns the interaction between each pair of pixels. In this way, the model develops what is known as a global receptive field, which means it can access all the relevant parts of the image.

Since a high-resolution image may contain millions of pixels, chunked into thousands of patches, the attention map quickly becomes enormous. Because of this, the amount of computation grows quadratically as the resolution of the image increases.

In their new model series, called EfficientViT, the MIT researchers used a simpler mechanism to build the attention map — replacing the nonlinear similarity function with a linear similarity function. As such, they can rearrange the order of operations to reduce total calculations without changing functionality and losing the global receptive field. With their model, the amount of computation needed for a prediction grows linearly as the image resolution grows.
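The reordering described above can be sketched in plain Python. This is not the EfficientViT implementation: it assumes a ReLU feature map as the linear similarity function purely for illustration, and all function names and inputs are hypothetical. The first function builds the full N x N attention map; the second summarizes keys and values once and reuses that summary for every query, so the work grows linearly with the number of tokens.

```python
def relu(vec):
    return [max(0.0, x) for x in vec]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention_quadratic(Q, K, V):
    """Build every row of the N x N similarity map, then weight the values."""
    Q, K = [relu(q) for q in Q], [relu(k) for k in K]
    out = []
    for q in Q:
        sims = [dot(q, k) for k in K]  # one row of the N x N map
        total = sum(sims)
        out.append([sum(s * v[c] for s, v in zip(sims, V)) / total
                    for c in range(len(V[0]))])
    return out

def attention_linear(Q, K, V):
    """Compute the d x d_v summary K^T V once, then apply it to each query."""
    Q, K = [relu(q) for q in Q], [relu(k) for k in K]
    d, dv = len(K[0]), len(V[0])
    kv = [[sum(k[r] * v[c] for k, v in zip(K, V)) for c in range(dv)]
          for r in range(d)]
    k_sum = [sum(k[r] for k in K) for r in range(d)]
    out = []
    for q in Q:
        norm = dot(q, k_sum)  # same normalizer as the row sum above
        out.append([dot(q, [kv[r][c] for r in range(d)]) / norm
                    for c in range(dv)])
    return out

# Three tokens with 2-dimensional queries, keys, and values (made-up numbers).
Q = [[1.0, 2.0], [0.5, -1.0], [2.0, 0.5]]
K = [[1.0, 0.0], [0.5, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

print(attention_quadratic(Q, K, V))
print(attention_linear(Q, K, V))  # same values up to floating-point rounding
```

Both functions return the same result because matrix multiplication is associative; only the order of operations, and therefore the cost, differs.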

“But there is no free lunch. The linear attention only captures global context about the image, losing local information, which makes the accuracy worse,” Han says.

To compensate for that accuracy loss, the researchers included two extra components in their model, each of which adds only a small amount of computation.

One of those elements helps the model capture local feature interactions, mitigating the linear function’s weakness in local information extraction. The second, a module that enables multiscale learning, helps the model recognize both large and small objects.

“The most critical part here is that we need to carefully balance the performance and the efficiency,” Cai says.

They designed EfficientViT with a hardware-friendly architecture, so it could be easier to run on different types of devices, such as virtual reality headsets or the edge computers on autonomous vehicles. Their model could also be applied to other computer vision tasks, like image classification.


#artificial intelligence#machine learning#computer vision#autonomous vehicles#internet of things#research#Youtube


¿

¡

#computer#computer vision#computer virus#ai#ai content#artificial intelligence#artists on tumblr#aesthetic#artistsoninstagram


7 Key Features to Include in CV Powered Parental App Development

Keeping kids safe online isn't easy. With new content and platforms emerging daily, parents need help. CV powered parental app development fills that gap with smart features built to monitor, protect, and support children in their digital lives. Here's a closer look at the core functionalities that make these apps truly powerful.

1. Real-Time AI Filtering of Visual Content

Kids see thousands of images and videos online every day. Not all of it is appropriate. Real-time AI filtering scans this content instantly, using computer vision to block nudity, violence, or other harmful visuals.

Filters content across browsers, apps, and social media.

Works instantly—no delay between detection and action.

Lets kids use platforms safely instead of blocking them completely.

This feature builds trust. Kids aren’t totally restricted, and parents feel secure knowing dangerous visuals won’t slip through.

2. Screen Time Management and Customizable Limits

Too much screen time can harm sleep, focus, and family time. CV powered parental app development includes tools that let parents set healthy usage boundaries.

Daily time limits for each app or device.

Scheduled downtimes during school, meals, or bedtime.

Alerts and summaries help parents track usage.

These tools promote balance without the need for constant arguments. Parents regain control without nagging. Kids learn responsibility.

3. Advanced S*xting Prevention

S*xting has become a serious issue among teens. AI and CV technology can detect suggestive or explicit content before it's sent.

Scans images stored or captured on the device.

Flags risky content and alerts parents discreetly.

Locks or hides questionable images until reviewed.

This feature not only prevents risky behavior but also opens doors for important conversations between parents and kids.

4. Social Media Monitoring for Child Safety

Many online risks come through social platforms. From cyberbullying to unwanted messages, social media is full of unseen dangers.

Monitors texts, images, and interactions on platforms like Instagram, TikTok, and Snapchat.

Alerts parents to dangerous messages or patterns.

Offers insights without reading every conversation.

Kids get to enjoy social apps. Parents gain peace of mind.

5. Seamless Device and App Management

Managing devices across a family can get messy. A solid CV powered parental app offers centralized control.

Manage multiple devices from one dashboard.

Allow or block specific apps with a tap.

Create profiles for each child with age-specific settings.

Everything stays synced and simple. No need to set rules on each device manually.

6. GPS Tracking and Geo-Fencing Capabilities

Online safety and physical safety go hand in hand. GPS tracking ensures that parents know where their kids are at all times.

Live location updates for all connected devices.

Set safe zones like school, home, or parks.

Get notified when kids enter or leave these zones.

This builds an extra layer of trust and security for both parents and children.
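A geo-fence check can be sketched as a distance test against a circular safe zone. The zone definition, coordinates, and function names below are hypothetical; a production app would also need to handle GPS noise and non-circular zones.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def zone_event(was_inside, lat, lon, zone):
    """Return (is_inside, event), where event is 'entered', 'left', or None."""
    inside = haversine_m(lat, lon, zone["lat"], zone["lon"]) <= zone["radius_m"]
    if inside and not was_inside:
        event = "entered"
    elif was_inside and not inside:
        event = "left"
    else:
        event = None
    return inside, event

# Hypothetical safe zone: a 200 m circle around a school.
school = {"lat": 40.0, "lon": -75.0, "radius_m": 200}

inside, event = zone_event(False, 40.001, -75.0, school)  # about 111 m away
print(inside, event)
inside, event = zone_event(inside, 40.01, -75.0, school)  # about 1.1 km away
print(inside, event)
```

Tracking the previous state (`was_inside`) is what turns a stream of location updates into discrete enter and leave notifications.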

7. Tamper-Proof Security Features

Tech-savvy kids might try to bypass controls. These features make sure the rules stay in place.

Blocks attempts to uninstall or disable the app.

Alerts parents of suspicious device behavior.

Ensures protection remains active at all times.

With this, parents don't have to worry about kids finding workarounds.

Importance of AI in These Features

Computer vision and AI drive every feature mentioned above. Without them, the app wouldn’t be smart enough to adapt to fast-changing threats.

Leveraging AI to Detect Inappropriate Content

AI models trained on massive datasets learn to spot the signs of explicit content. They don't just flag obvious things. They detect context, background elements, and more.

Detects pornography, nudity, and violence in visual content.

Understands evolving trends and new forms of inappropriate content.

Continues learning from feedback and corrections.

This level of detection is not possible through simple keyword or link blocking.

How AI Enhances Real-Time Filtering

Parents need fast, seamless protection—not laggy apps. With AI, scanning happens instantly.

Content is analyzed as it loads or appears.

Harmful material is blocked before it’s visible.

No need to wait for reports or summaries.

That’s a huge relief for parents who want to let kids explore safely.

Ensuring Accuracy with AI-Powered Monitoring

Accuracy matters. Too many false alarms create frustration. Too few put kids at risk.

AI reduces false positives and negatives over time.

Trained on diverse, real-world data to ensure balance.

Refined continuously through usage.

Apps built with strong AI foundations provide reliable, consistent protection that adapts.

Building a CV Powered Parental App for the Future

Keeping up with tech is tough. Parents need tools that don’t become outdated a year later. Future-proofing should be part of your CV powered parental app development strategy.

Scalability and Customization Options

As families grow and kids get older, needs change. So should your app.

Add more devices or family members easily.

Adjust content filters based on age or maturity.

Scale up without needing to switch platforms.

This keeps the app useful for years.

Continuous Model Updates for Better Accuracy

Threats change constantly. What’s harmful today might look different tomorrow.

Frequent updates to AI models ensure up-to-date detection.

Feedback loops improve performance over time.

Keeps the app relevant as digital behavior evolves.

Parents stay ahead of online risks—not behind them.

User-Friendly Interfaces for Parents and Kids

A great app is only as good as its usability. If it’s hard to use, it won’t work.

Clean dashboards for parents to manage settings.

Simple alerts and insights, no tech jargon.

Child-friendly interfaces that respect their privacy.

This helps families adopt the app quickly and use it effectively.

Conclusion

AI is the secret weapon in CV powered parental app development. It empowers parents with tools that work in real-time, adapt to change, and protect without over-restricting. Kids can enjoy the internet safely while parents gain peace of mind.

The Power of CV Technology in Online Safety

Computer vision adds a much-needed dimension to digital parenting. It understands images and video—not just text. That’s essential in a world full of visual content. When combined with AI, it creates a safety net that’s both smart and scalable.

If you're considering building your own CV powered parental control app, look for a development partner who knows what they're doing. Firms like Idea Usher have already built AI-powered solutions that keep families safe while balancing freedom and protection. Their experience can help you launch a secure, reliable app that genuinely makes a difference.

Because at the end of the day, safety isn’t just about blocking content; it’s about a CV powered parental control app that grows with your child.


#artificial intelligence services#machine learning solutions#AI development company#machine learning development#AI services India#AI consulting services#ML model development#custom AI solutions#deep learning services#natural language processing#computer vision solutions#AI integration services#AI for business#enterprise AI solutions#machine learning consulting#predictive analytics#AI software development#intelligent automation


Computer Vision

👁️🗨️ TL;DR – Computer Vision Lecture Summary (with emojis)

🔍 What It Is: Computer Vision (CV) teaches machines to “see” and understand images & videos like humans, but often faster and more consistently. Not to be confused with just image editing; it’s about interpretation.

🧠 How It Works: CV pipelines go from capturing images ➡️ cleaning them up ➡️ analyzing them with AI (mostly deep…

#ai#Artificial Intelligence#ChatGPT Deep Research#Computer Vision#Google Gemini Deep Research#image classification#vision systems


Explore the world of Night Vision Tech: its history, applications, and future innovations. Uncover how this groundbreaking technology shapes industries and solves puzzles alike.

#artificial intelligence#healthcare#news#robotics#technology#tech#energy#future#ai art#ai generated#night vision#night vale#night view#future technology#computing#computer#gadgets#futurism


How Computer Vision is Reducing Manufacturing Defects in Home Appliances

Discover how AI-powered computer vision is transforming home appliance manufacturing by automating quality control, defect detection, and predictive maintenance. Learn how leading brands like Whirlpool have reduced defects, improved efficiency, and cut costs with AI-driven manufacturing solutions. Explore how smart manufacturing can enhance production and ensure product reliability. Partner with Theta Technolabs for cutting-edge AI and computer vision solutions to optimize your manufacturing process.

#Computer vision in manufacturing#AI-powered quality control#Artificial Intelligence#Technology#Smart manufacturing with AI#AI in industrial quality control


Get Ready for a Hilarious and Heartwarming Nature Adventure


#FriendsAreas#10th anniversary#55th birthday#animal behavior#animal bloopers#artificial intelligence#backyard adventures#backyard bugs#backyard discoveries#backyard nature#biodiversity#biodiversity data#biodiversity monitoring#bird watching#birds#bizarre plants#bug detection#Canada#Citizen Science#City Nature Challenge#City Nature Challenge 2025#Community Science#computer vision#crazy creatures#cutest animal faces#data collection#Earth Month#Easter Break#Easter weekend#eco-awareness


Project "ML.Satellite": Image Parser

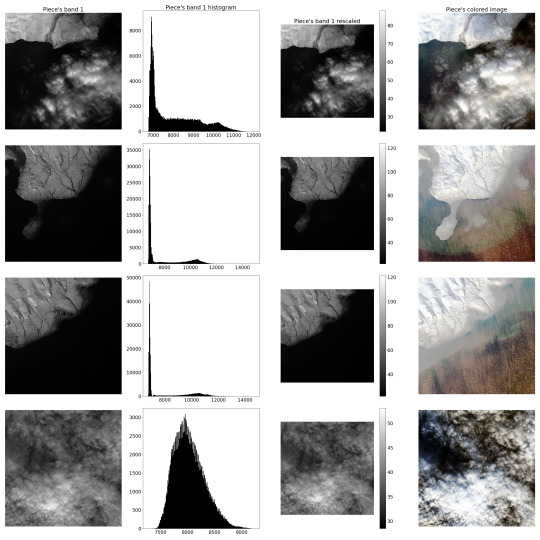

In order to speed up the "manufacturing" of the training dataset as much as possible, extreme automation is necessary. Hence, the next step was to create a semi-automatic satellite multispectral Image Parser.

Firstly, it should carve smaller pieces out of the big picture and adjust them linearly (radiometric rescaling), since the spectrometer produces somewhat distorted results compared to the actual radiance of the Earth's surface. These "pieces" will make up the dataset. The plan was to "manufacture" about 400 such "pieces", each 500 by 500 "pixels".
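A minimal sketch of this first step, using plain nested lists so it runs without extra libraries. The function names, the tiny 5 by 4 band, and the gain and offset values are all hypothetical; in practice the rescaling coefficients would come from the scene metadata and the tiles would be 500 by 500.

```python
def carve_tiles(band, tile_size):
    """Split a 2-D band (list of rows) into non-overlapping square tiles,
    dropping partial tiles at the right and bottom edges."""
    rows, cols = len(band), len(band[0])
    tiles = []
    for top in range(0, rows - tile_size + 1, tile_size):
        for left in range(0, cols - tile_size + 1, tile_size):
            tiles.append([row[left:left + tile_size]
                          for row in band[top:top + tile_size]])
    return tiles

def rescale_tile(tile, gain, offset):
    """Linear radiometric rescaling: value = gain * digital_number + offset."""
    return [[gain * dn + offset for dn in row] for row in tile]

# Toy 5 x 4 band of raw digital numbers (real scenes are far larger).
band = [[r * 4 + c for c in range(4)] for r in range(5)]

tiles = carve_tiles(band, tile_size=2)
print(len(tiles))                                       # → 4
print(rescale_tile(tiles[0], gain=0.5, offset=1.0))     # → [[1.0, 1.5], [3.0, 3.5]]
```

Dropping the partial edge tiles keeps every "piece" the same shape, which is usually what a training dataset needs.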

P.S.: Below are example images of the procedure described above. (Novaya Zemlya Archipelago)

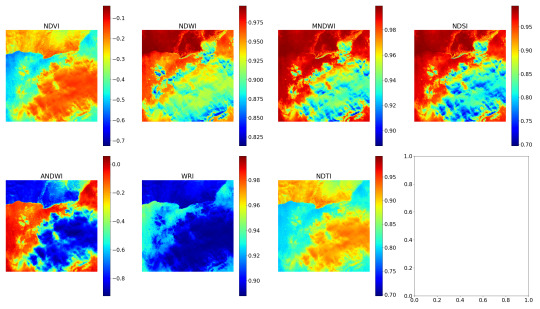

Secondly, it should calculate some remote sensing indexes. For this task, a list of empirical indexes was taken: NDVI, NDWI, MNDWI, NDSI, ANDWI (alternatively calculated NDWI), WRI and NDTI. Only a few of them proved useful for the project's purposes.
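Several of these indexes share the same normalized-difference form and differ only in which bands they compare. The sketch below uses the commonly cited band pairs for NDVI, NDWI, and NDSI; the post does not say which band definitions the project used, so treat the pairings and the reflectance values as assumptions.

```python
def normalized_difference(a, b):
    """(a - b) / (a + b), returning 0.0 where the denominator is zero."""
    total = a + b
    return (a - b) / total if total != 0 else 0.0

def ndvi(nir, red):
    """Vegetation index: near-infrared versus red reflectance."""
    return normalized_difference(nir, red)

def ndwi(green, nir):
    """Water index: green versus near-infrared reflectance."""
    return normalized_difference(green, nir)

def ndsi(green, swir):
    """Snow index: green versus shortwave-infrared reflectance."""
    return normalized_difference(green, swir)

# Made-up reflectance values for a single pixel.
print(ndvi(nir=0.5, red=0.1))    # high value suggests vegetation
print(ndwi(green=0.3, nir=0.1))  # positive value suggests water
```

Each function would be applied per pixel to every "piece", producing one index map per tile.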

P.S.2: The following are example images of the indexing procedure.

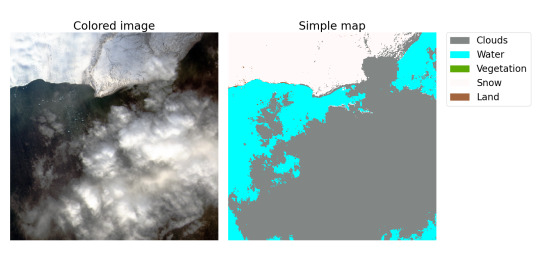

Lastly, it should compute the "labels" for the "pieces": a schematic map of the territory in the image, splitting it into several types according to the calculated indexes. To simplify segmentation, in our project a territory can consist only of the following types: Clouds, Water (seas, oceans, rivers, lakes…), Vegetation (forests, jungles…), Snow, and Land (this class includes everything else). And, of course, the Parser should save the processed dataset and labels.
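One way such index-based labeling could look is a short chain of threshold rules. Everything specific here is an assumption: the thresholds, the rule order, and the use of a brightness value to separate clouds are placeholders, not the project's actual rules.

```python
CLASSES = ("Clouds", "Water", "Vegetation", "Snow", "Land")

def label_pixel(ndvi, ndwi, ndsi, brightness,
                cloud_brightness=0.6, snow_ndsi=0.4,
                water_ndwi=0.3, veg_ndvi=0.3):
    """Assign one of the five classes using hypothetical index thresholds.
    Rules are checked in order; Land is the fallback for everything else."""
    if ndsi >= snow_ndsi:
        return "Snow"
    if brightness >= cloud_brightness:
        return "Clouds"
    if ndwi >= water_ndwi:
        return "Water"
    if ndvi >= veg_ndvi:
        return "Vegetation"
    return "Land"

def label_tile(index_tile):
    """Label every pixel of a tile given (ndvi, ndwi, ndsi, brightness) tuples."""
    return [[label_pixel(*px) for px in row] for row in index_tile]

# Made-up index values for a 1 x 3 tile.
tile = [[(0.7, -0.5, -0.2, 0.2), (-0.1, 0.6, 0.1, 0.1), (0.05, -0.1, 0.0, 0.3)]]
print(label_tile(tile))  # → [['Vegetation', 'Water', 'Land']]
```

Because the thresholds are empirical, the resulting map is only an approximation of the true land cover.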

P.S.3: Below are a sample image of a colored "piece" and a simple map based on the label assigned to this "piece". The map may look slightly inaccurate, which is not surprising: as far as I know, the indexes are empirical and by definition cannot be precise. As a result, if a model can be trained to reproduce this index-based (analytical) classification accurately enough, it may also be possible to create a model that classifies optical images better than the analytical approach does.

#student project#machine learning#neural network#ai#artificial intelligence#computer vision#image segmentation#segmentation task#satellite#remote sensing#optical sensors#geoinformatics


Discover Rydot's ConvAI Platform, where generative AI transforms ideas into reality. Unleash the potential of intelligent assistance for your projects today.


The Role of IoT in Smart Healthcare: How Connected Devices are Revolutionizing Patient Monitoring and Hospital Management

The Internet of Things (IoT) is playing a transformative role in modern healthcare by enabling real-time patient monitoring, automating hospital operations, and ensuring proactive medical interventions. Senrysa Technologies integrates IoT-driven solutions into healthcare, allowing for seamless data collection, continuous monitoring, and smart decision-making.

One of the most impactful applications of IoT in healthcare is Remote Patient Monitoring (RPM). IoT-enabled wearable devices track vital health metrics such as heart rate, blood pressure, oxygen levels, and glucose levels in real time. In case of any irregularities, instant alerts are sent to healthcare providers, ensuring timely medical attention and reducing emergency hospital visits.
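The alerting logic behind remote patient monitoring can be sketched as a range check on each incoming reading. The metric names and ranges below are illustrative placeholders, not clinical guidance and not taken from any specific product.

```python
# Illustrative acceptable ranges per metric (hypothetical, not clinical guidance).
NORMAL_RANGES = {
    "heart_rate_bpm": (50, 120),
    "spo2_percent": (92, 100),
    "systolic_mmhg": (90, 140),
}

def check_reading(reading, ranges=NORMAL_RANGES):
    """Return one alert message for each metric outside its configured range."""
    alerts = []
    for metric, value in reading.items():
        if metric not in ranges:
            continue  # ignore metrics with no configured range
        low, high = ranges[metric]
        if not low <= value <= high:
            alerts.append(f"{metric}={value} outside {low}-{high}")
    return alerts

# Hypothetical reading from a wearable device.
reading = {"heart_rate_bpm": 135, "spo2_percent": 97}
print(check_reading(reading))  # → ['heart_rate_bpm=135 outside 50-120']
```

In a real deployment the returned alerts would be forwarded to a healthcare provider rather than printed.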

IoT also enhances Smart Hospital Management, where sensors track and monitor medical equipment, streamline inventory management, and ensure optimal utilization of resources. Automated tracking systems prevent shortages of essential medical supplies and minimize operational inefficiencies.

Additionally, IoT-based Emergency Alert Systems detect critical health conditions and automatically notify doctors, emergency responders, or family members, ensuring immediate intervention. By reducing hospital readmissions, preventing medical emergencies, and optimizing healthcare workflows, IoT is making healthcare more proactive than reactive.

Senrysa Technologies is leading the way in integrating IoT solutions for a more connected, responsive, and intelligent healthcare system that prioritizes patient safety and operational efficiency.

#Internet of Things (IoT) in Healthcare#Computer Vision in Healthcare#Electronic Health Record (EHR) Software#Artificial Intelligence (AI) in Healthcare#Smart Healthcare Tech


A Detailed Introduction to Reinforcement Learning: Methods and Applications

🔍 Reinforcement learning is no longer an unfamiliar concept to AI enthusiasts. It is a learning method that lets machines explore and refine their skills on their own through trial and error and feedback from the environment. 🧠✨

💡 Did you know? Reinforcement learning has driven major advances, from helping AI beat humans at complex games 🎮 such as Go, to optimizing real-world systems such as energy management 🌱, self-driving cars 🚗, and even customer service 📞!

📈 The method works by learning from rewards and penalties 🔄, so an AI model not only makes decisions but also arrives at the best strategies for reaching its goal. This opens up enormous potential in fields such as finance 💰, healthcare 🏥, and education 📚.
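To make the reward-and-penalty idea concrete, here is a small sketch of tabular Q-learning, one common reinforcement learning algorithm. The environment is a made-up line of five cells where reaching the last cell earns a reward and every other step costs a small penalty; all names and hyperparameters are illustrative.

```python
import random

N_STATES = 5                 # cells 0..4 on a line; cell 4 is the goal
ACTIONS = ("left", "right")

def step(state, action):
    """Move one cell. Reward +1 on reaching the goal, -0.1 otherwise."""
    nxt = max(0, state - 1) if action == "left" else state + 1
    if nxt == N_STATES - 1:
        return nxt, 1.0, True
    return nxt, -0.1, False

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values by trial and error with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [{a: 0.0 for a in ACTIONS} for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap on episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)                      # explore
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])  # exploit
            nxt, reward, done = step(state, action)
            target = reward if done else reward + gamma * max(q[nxt].values())
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q

q_table = train()
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)  # the learned best action for each non-goal cell
```

After training, the greedy policy should head toward the goal from every cell, because that path collects the reward with the fewest penalties.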

👉 Want to dig deeper into how it works, its standout applications, and why reinforcement learning is seen as the future of artificial intelligence? Read the full article here: A Detailed Introduction to Reinforcement Learning: Methods and Applications

📢 Don't forget to share this post so your friends can pick up some interesting AI knowledge too! 🤝💬


The ResNet Model: A Breakthrough in Image Recognition

🌟 Have you ever wondered what makes modern image recognition technology work so well? 🧠 It comes down to deep learning models such as ResNet, one of the major advances in AI and deep learning! 🚀

🔥 ResNet (Residual Network) not only simplifies building deep neural networks but also helps solve a hard problem that comes with network depth (vanishing gradients). Thanks to its strong performance, ResNet has laid the groundwork for many real-world applications, such as:

📱 Face recognition on smartphones.

🛍️ Product classification in e-commerce.

🚗 Self-driving systems in smart cars.

💡 What makes ResNet special is its residual learning mechanism: each block learns only the difference (the residual) between its input and the desired output, which keeps performance strong even as network depth increases. 🤯
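The core of residual learning is a skip connection that adds a block's input back to its output, y = x + F(x). The sketch below uses two tiny fully connected layers in plain Python; real ResNet blocks use convolutions, normalization, and an activation after the addition, all omitted here, and the weights are made up.

```python
def relu(vec):
    return [max(0.0, v) for v in vec]

def linear(weights, vec):
    """Matrix-vector product with weights given as a list of rows."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]

def residual_block(x, w1, w2):
    """y = x + F(x), where F(x) = W2 · relu(W1 · x) is the learned residual."""
    fx = linear(w2, relu(linear(w1, x)))
    return [xi + fi for xi, fi in zip(x, fx)]

x = [1.0, -2.0]
zeros = [[0.0, 0.0], [0.0, 0.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
half = [[0.5, 0.0], [0.0, 0.5]]

print(residual_block(x, zeros, zeros))    # → [1.0, -2.0]: the block passes x through
print(residual_block(x, identity, half))  # → [1.5, -2.0]: x plus a small correction
```

With all-zero weights the block reduces to the identity, which is why stacking many such blocks does not have to degrade the signal and why gradients can flow through the skip path.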

🎯 Curious about how ResNet works and why it is a "star" of image recognition? Read the full article here: 👉 The ResNet Model: A Breakthrough in Image Recognition

❤️ If you found this useful, feel free to leave a ❤️ and share it to spread the knowledge! 🌐
